26 Day 26 (April 24)

26.1 Announcements

You should be caught up on April check-ins

Send me (thefley@ksu.edu) and Aidan (awkerns@ksu.edu) an email to request a 30-minute time slot between May 1 and May 9 to give your final presentation. When you email us, please give three dates/times that work for you.

Questions from journals

- Comment about making progress at this stage of your project (link to book I mentioned)

- “When deciding between two different sources of information that might conflict with one another, would it be better to mark one as being less reliable than the other? For instance, in the aphid example the math teacher just gave a cursory glance while the biology teacher was more careful with her counts. If the data is theoretically the same, would it be better to use the biology teacher’s data only?”

- “If we were to fuse the NOAA data with the at home data, is there a way to assign a higher weight to what we believe is the more accurate data or would the variance account for that?”

- “I have some questions about simulating data. I was wondering if we would be able to use the MCMC method to do that, especially if we have missing data from the factors and the wanted data as well. Is there another method that would be better?”

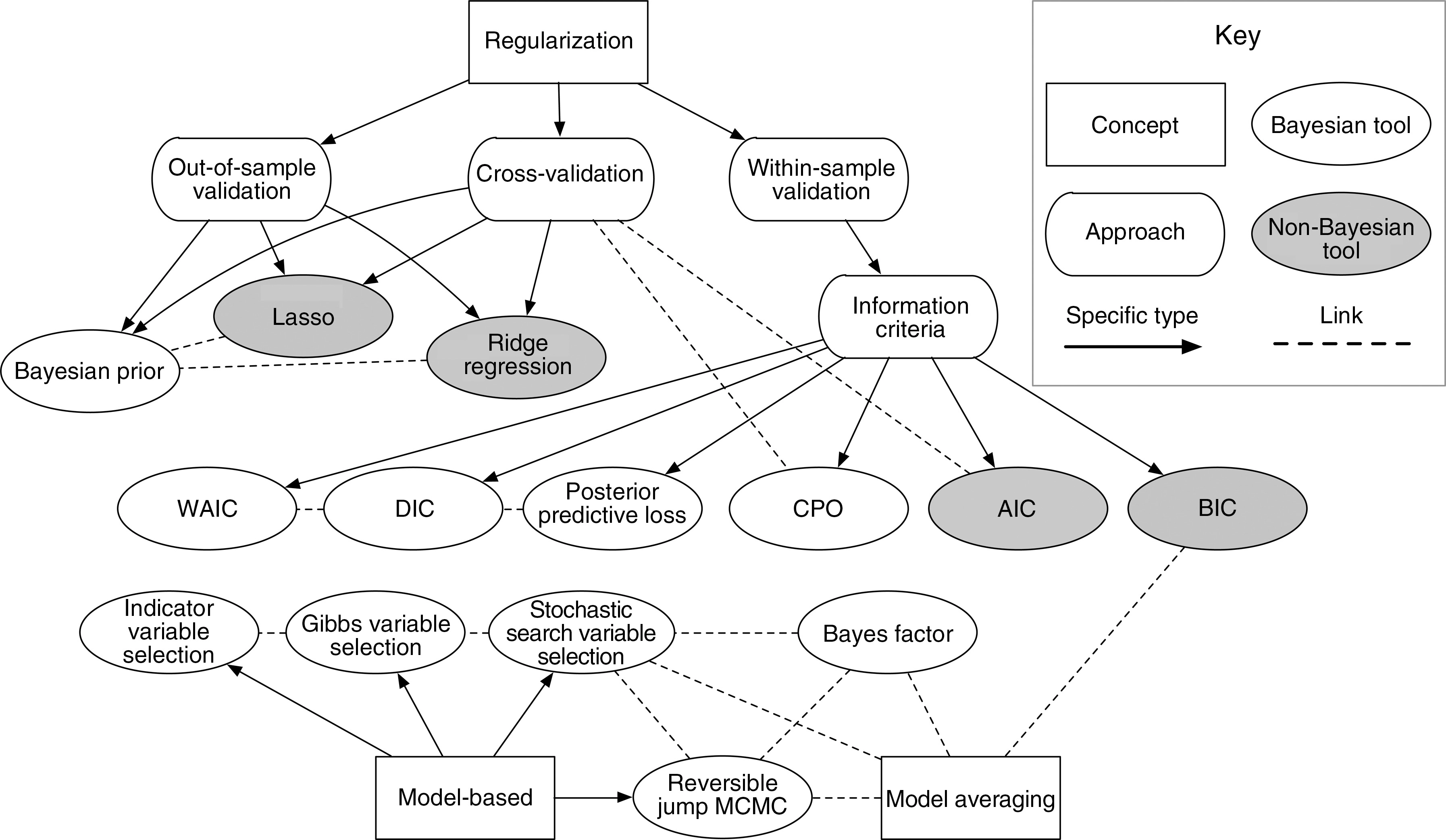

26.2 Model selection/comparison

Today's material is selected from Chs. 13 - 15 of BBM2L.

If you have more than one model for a given dataset/problem, how do you determine which one(s) to use for prediction and inference?

Predictive performance metrics

- Information criteria vs. scoring functions

- Important characteristics of a predictive distribution (example using Day 14 notes; maximize the sharpness of the predictive distributions, subject to calibration)

- Good resource (here)
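To make "maximize sharpness, subject to calibration" concrete, here is a minimal sketch in Python (the course examples are in R; this is not the Day 14 example). It assumes simulated data from N(0, 1) and three hypothetical Gaussian predictive distributions that differ only in their standard deviation. Calibration is checked with the empirical coverage of a 90% prediction interval, sharpness with the interval width, and the mean log score is included as one example of a scoring function.

```python
import math
import random
from statistics import NormalDist

random.seed(1)
# Assumption: the "truth" is N(0, 1); these are simulated observations.
y = [random.gauss(0.0, 1.0) for _ in range(5000)]

def evaluate(pred_sd, level=0.90):
    """Coverage, interval width, and mean log score for a N(0, pred_sd^2) predictive."""
    pred = NormalDist(0.0, pred_sd)
    lo = pred.inv_cdf((1 - level) / 2)
    hi = pred.inv_cdf(1 - (1 - level) / 2)
    coverage = sum(lo <= yi <= hi for yi in y) / len(y)       # calibration check
    width = hi - lo                                           # sharpness (smaller is sharper)
    log_score = sum(math.log(pred.pdf(yi)) for yi in y) / len(y)  # larger is better
    return coverage, width, log_score

# Overconfident (sd = 0.5), well matched (sd = 1.0), underconfident (sd = 3.0)
results = {sd: evaluate(sd) for sd in (0.5, 1.0, 3.0)}
for sd, (cov, width, ls) in results.items():
    print(f"sd = {sd}: coverage = {cov:.3f}, width = {width:.2f}, log score = {ls:.3f}")
```

The predictive with sd = 0.5 is the sharpest but its intervals cover too rarely, the one with sd = 3.0 covers almost everything but is needlessly wide, and the log score rewards the predictive that is both calibrated and as sharp as calibration allows.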

Live example using DIC R code
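As a rough illustration of what a DIC calculation involves, here is a Python sketch (not the linked R code). It assumes simulated normal data with known variance and compares two hypothetical models: model A with an unknown mean and a vague normal prior, and model B with the mean fixed at 0. The deviance is D(mu) = -2 log p(y | mu), the effective number of parameters is p_D = mean(D(mu)) - D(mean(mu)), and DIC = D(mean(mu)) + 2 p_D. Because the prior is conjugate, posterior draws are sampled directly instead of with MCMC.

```python
import math
import random

random.seed(26)
n, sigma = 50, 1.0
# Assumption: simulated data with true mean 1 and known sd sigma.
y = [random.gauss(1.0, sigma) for _ in range(n)]

def deviance(mu):
    """D(mu) = -2 * log-likelihood of y under N(mu, sigma^2)."""
    ll = sum(-0.5 * math.log(2 * math.pi * sigma**2)
             - (yi - mu) ** 2 / (2 * sigma**2) for yi in y)
    return -2.0 * ll

def dic(mu_draws):
    """Return (DIC, p_D) computed from posterior draws of mu."""
    mean_dev = sum(deviance(m) for m in mu_draws) / len(mu_draws)
    mu_bar = sum(mu_draws) / len(mu_draws)
    p_d = mean_dev - deviance(mu_bar)
    return deviance(mu_bar) + 2 * p_d, p_d

# Model A: mu ~ N(0, tau2); conjugate posterior is normal.
tau2 = 100.0**2
post_var = 1.0 / (n / sigma**2 + 1.0 / tau2)
post_mean = post_var * sum(y) / sigma**2
draws_a = [random.gauss(post_mean, math.sqrt(post_var)) for _ in range(4000)]
dic_a, pd_a = dic(draws_a)

# Model B: mu fixed at 0, so there is nothing to estimate and p_D = 0.
dic_b, pd_b = dic([0.0])

print(f"Model A: DIC = {dic_a:.1f}, p_D = {pd_a:.2f}")
print(f"Model B: DIC = {dic_b:.1f}, p_D = {pd_b:.2f}")
```

Lower DIC is preferred. In this setup p_D for model A should be close to 1, matching its single free parameter.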

Live example using regularization R code
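The linked R code is not reproduced here. As one common form of regularization, the Python sketch below uses ridge regression with a single predictor and no intercept, on simulated data (the slope 0.5 and the penalty values are assumptions chosen for illustration). The ridge estimate minimizes sum (y - b x)^2 + lambda b^2, and it equals the posterior mean of b under the model y ~ N(b x, sigma^2) with prior b ~ N(0, sigma^2 / lambda), so a larger penalty corresponds to a tighter prior around zero.

```python
import random

random.seed(37)
n = 30
# Assumption: simulated data with true slope 0.5 and unit noise sd.
x = [random.gauss(0.0, 1.0) for _ in range(n)]
y = [0.5 * xi + random.gauss(0.0, 1.0) for xi in x]

sxy = sum(xi * yi for xi, yi in zip(x, y))
sxx = sum(xi * xi for xi in x)

def ridge_slope(lam):
    """Minimizer of sum (y - b*x)^2 + lam * b^2; lam = 0 gives least squares."""
    return sxy / (sxx + lam)

lambdas = [0.0, 1.0, 10.0, 100.0]
slopes = [ridge_slope(lam) for lam in lambdas]
for lam, b in zip(lambdas, slopes):
    print(f"lambda = {lam:6.1f}  slope = {b:.3f}")
```

The estimates shrink toward zero as lambda grows. In practice lambda (or the prior variance) would be chosen by a predictive criterion such as a score on held-out data.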

Live example using Bayesian model averaging R code
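A minimal Python sketch of the idea behind Bayesian model averaging follows (not the linked R code). It assumes simulated normal data with known variance and two hypothetical models with equal prior probability: M1 fixes the mean at 0, and M2 gives the mean a N(0, tau^2) prior. Both marginal likelihoods are available in closed form here, the posterior model probabilities are proportional to marginal likelihood times prior model probability, and the model-averaged estimate weights each model's posterior mean by its posterior probability.

```python
import math
import random

random.seed(39)
n, sigma, tau = 20, 1.0, 1.0
# Assumption: simulated data with a small true mean, so neither model dominates by design.
y = [random.gauss(0.3, sigma) for _ in range(n)]
s1 = sum(y)
s2 = sum(yi**2 for yi in y)

# M1: mu = 0, so the marginal likelihood is just the likelihood at mu = 0.
log_m1 = -0.5 * n * math.log(2 * math.pi * sigma**2) - s2 / (2 * sigma**2)

# M2: mu ~ N(0, tau^2) integrated out analytically.
log_m2 = (-0.5 * n * math.log(2 * math.pi * sigma**2)
          - 0.5 * math.log(1 + n * tau**2 / sigma**2)
          - (s2 - tau**2 * s1**2 / (sigma**2 + n * tau**2)) / (2 * sigma**2))

# Posterior model probabilities with equal prior model probabilities.
log_ms = [log_m1, log_m2]
mx = max(log_ms)                                # subtract the max for numerical stability
unnorm = [math.exp(v - mx) for v in log_ms]
weights = [u / sum(unnorm) for u in unnorm]

# Posterior mean of mu under each model, then the model-averaged mean.
post_mean_m1 = 0.0
post_var_m2 = 1.0 / (n / sigma**2 + 1.0 / tau**2)
post_mean_m2 = post_var_m2 * s1 / sigma**2
bma_mean = weights[0] * post_mean_m1 + weights[1] * post_mean_m2

print(f"P(M1 | y) = {weights[0]:.3f}, P(M2 | y) = {weights[1]:.3f}")
print(f"Model-averaged posterior mean of mu = {bma_mean:.3f}")
```

With more than two models the same weighting applies; when marginal likelihoods are not available in closed form they have to be approximated.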